In the rapidly changing landscape of artificial intelligence, Alibaba has made waves yet again with the launch of WAN2.1, its newest AI video generation model. The technology could change the way we produce, consume, and engage with video. Whether you are a content creator, marketer, gamer, or fan of the entertainment industry, WAN2.1 offers a look into the future of AI-generated video. In this blog post, we’ll dive into what WAN2.1 is, its key features, how it stacks up against competitors, and its potential applications across industries.

What is WAN2.1 and Why is it Important?

WAN2.1 is Alibaba’s next-generation AI model designed to generate high-quality, realistic videos from text, images, or even rough sketches. Building on the success of its predecessor, WAN2.0, this new version introduces significant advancements in video quality, speed, and versatility.

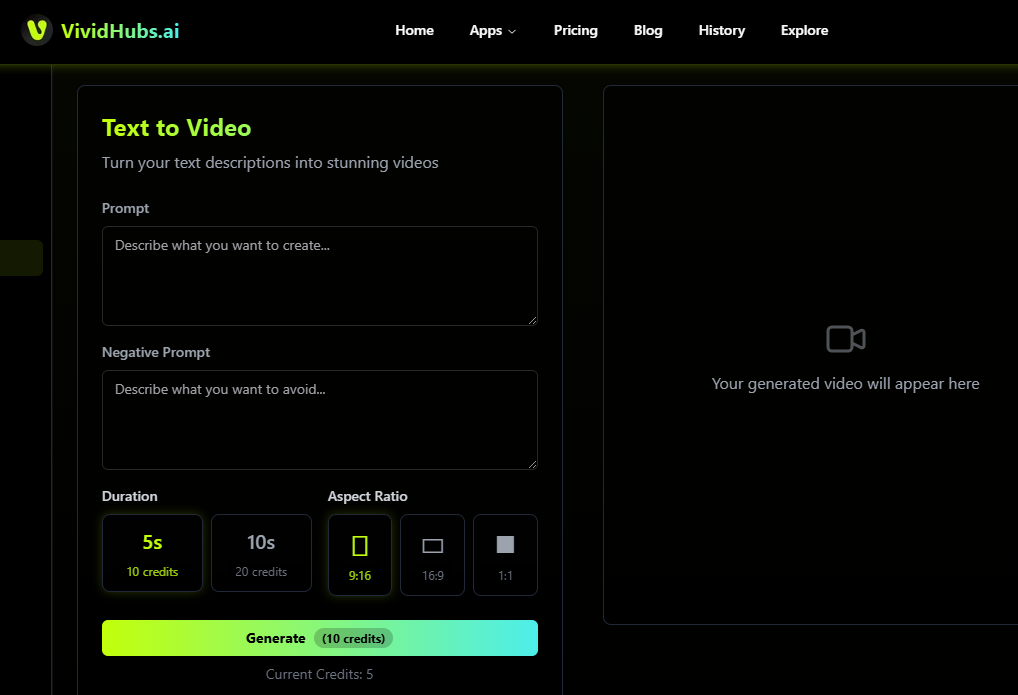

Video is king in the modern digital age. From social media to corporate marketing campaigns, the demand for engaging, high-quality video is booming. But traditional video creation is expensive, time-consuming, and labor-intensive. This is where WAN2.1 comes into the picture. With the power of AI, it lets users create professional-looking videos in minutes and at a fraction of the usual cost, bringing video creation within reach of businesses and individuals alike.

Key Features and Improvements Over Previous Models

WAN2.1 isn’t just an incremental update; it’s a game-changer. Here are some of its standout features and improvements:

1. Enhanced Video Quality

WAN2.1 produces videos with unparalleled clarity, resolution, and realism. The model uses advanced neural networks to generate lifelike visuals, making it difficult to distinguish between AI-generated and human-created content.

2. Faster Processing Speeds

One of the most significant upgrades in WAN2.1 is its ability to generate videos at lightning speed. This is a major improvement over WAN2.0, which, while impressive, had longer rendering times.

3. Improved Contextual Understanding

WAN2.1 excels at understanding complex prompts and translating them into coherent video sequences. Whether it’s a detailed script or a simple idea, the model can interpret and execute it with remarkable accuracy.

4. Multi-Modal Input Support

Unlike many AI video tools that rely solely on text prompts, WAN2.1 supports multiple input formats, including images, sketches, and even audio. This flexibility opens up new creative possibilities for users.

5. Customizable Outputs

With WAN2.1, users can fine-tune various aspects of the video, such as style, tone, and pacing, to align with their specific needs. This level of customization was limited in earlier versions.

How Does WAN2.1 Compare to Other AI Video Generation Tools?

The AI video generation space is becoming increasingly competitive, with tools like OpenAI’s Sora, Runway, and Pika making waves. So, how does WAN2.1 stack up?

WAN2.1 vs. Sora

While Sora is known for its ability to generate highly detailed and imaginative videos, WAN2.1 focuses on practicality and speed. WAN2.1’s multi-modal input support gives it an edge in versatility, making it a better choice for users who need to work with diverse media formats.

WAN2.1 vs. Runway

Runway is a popular choice for creatives due to its user-friendly interface and extensive editing tools. However, WAN2.1 surpasses Runway in terms of video quality and processing speed, making it ideal for professionals who require high-output efficiency.

WAN2.1 vs. Pika

Pika is renowned for its simplicity and ease of use, but it lacks the advanced features and customization options offered by WAN2.1. For users seeking a more robust and flexible solution, WAN2.1 is the clear winner.

Potential Use Cases for WAN2.1

The applications of WAN2.1 are virtually limitless. Here are some of the most promising use cases:

1. Content Creation

YouTube creators, bloggers, and social media influencers can use WAN2.1 to produce engaging video content without the need for expensive equipment or editing software.

2. Marketing and Advertising

Brands can leverage WAN2.1 to create personalized video ads, product demos, and promotional content tailored to their target audience.

3. Gaming

Game developers can use WAN2.1 to generate realistic cutscenes, trailers, and in-game animations, enhancing the overall gaming experience.

4. Entertainment

Filmmakers and animators can utilize WAN2.1 to streamline the production process, from storyboarding to final edits.

5. Education

Educators can create interactive video lessons and tutorials, making learning more engaging and accessible.

Technical Details: How WAN2.1 Leverages AI for Video Generation

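At its core, WAN2.1 is powered by a diffusion transformer rather than a Generative Adversarial Network (GAN). According to Alibaba’s published model documentation, a video VAE compresses footage into a compact latent space, a Transformer-based diffusion model generates content in that space, and a text encoder conditions the process on the prompt. These components work together to analyze input data, generate video frames, and keep sequences consistent.

Diffusion for Realism

Diffusion-style generation is responsible for the lifelike quality of WAN2.1’s videos. The model starts from random noise in the latent space and refines it step by step into a clean result, which the VAE then decodes into video frames. Alibaba describes the training objective as flow matching, a close relative of classic diffusion training.

Transformers for Contextual Understanding

Transformers enable WAN2.1 to understand and interpret complex prompts. A T5-based text encoder turns the prompt into embeddings, and cross-attention layers feed them into the video model so that the generated video aligns with the user’s intent.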
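To make the role of Transformers a little more concrete, here is a minimal sketch of scaled dot-product attention, the basic operation inside Transformer layers. It is a toy illustration in plain Python and not WAN2.1’s actual code: the function names, the two "prompt token" vectors, and the single "video patch" query are all made up for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Hypothetical toy data: two prompt tokens and one video patch.
prompt_keys = [[1.0, 0.0], [0.0, 1.0]]    # e.g. "red", "car"
prompt_values = [[0.9, 0.1], [0.2, 0.8]]
patch_query = [[2.0, 0.5]]                # this patch "asks" mostly about "red"

print(attention(patch_query, prompt_keys, prompt_values))
```

The patch’s output leans toward the first value vector because its query is more similar to the first key. In a real model this happens across thousands of tokens with learned projections, which is how prompt details can end up in the right place in each frame.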

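Rendering Speed and Hardware

WAN2.1 does not render in real time, but it is comparatively light for a video model. According to the project’s published figures, the smallest text-to-video variant (1.3B parameters) needs about 8 GB of GPU memory and produces a 5-second 480p clip in roughly four minutes on an RTX 4090. Users without a suitable GPU can run the model on cloud infrastructure instead, which makes it usable from lower-end devices.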

Future Implications of WAN2.1

The release of WAN2.1 marks a significant milestone in the AI video industry. As the technology continues to evolve, we can expect to see:

- Increased Accessibility: AI video tools like WAN2.1 will become more affordable and user-friendly, empowering individuals and small businesses to create professional content.

- Enhanced Creativity: With AI handling the technical aspects of video production, creators can focus on storytelling and innovation.

- New Business Models: The rise of AI-generated content could lead to new revenue streams, such as subscription-based video libraries or on-demand video services.

FAQs About WAN2.1: Alibaba’s AI-Powered Video Generation Model

Here are some frequently asked questions about WAN2.1 to help you better understand this groundbreaking technology:

1. What is WAN2.1?

WAN2.1 is Alibaba’s latest AI-powered video generation model that creates high-quality, realistic videos from text, images, sketches, or audio inputs. It builds on the success of its predecessor, WAN2.0, with significant improvements in speed, quality, and versatility.

2. Who can benefit from using WAN2.1?

WAN2.1 is designed for a wide range of users, including:

- Content creators (YouTubers, bloggers, influencers)

- Marketers (for ads, product demos, and promotional content)

- Game developers (for cutscenes, trailers, and animations)

- Filmmakers and animators

- Educators (for interactive video lessons)

3. Can WAN2.1 replace traditional video production?

While WAN2.1 significantly reduces the time and cost of video production, it is not a complete replacement for traditional methods. Instead, it serves as a powerful tool to complement and enhance existing workflows, especially for tasks requiring quick turnarounds or high customization.

4. What are the system requirements for using WAN2.1?

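It depends on how you run it. Through a cloud service, generation happens on remote hardware, so a lower-end device with a stable internet connection is enough. To run the open-source model on your own machine, you need a capable GPU; the smallest variant is reported to need about 8 GB of GPU memory.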

5. Is WAN2.1 available to the public?

Yes. WAN2.1 is open source: the model is published on Hugging Face, so you can download it and run it on your own machine. It is also offered to enterprise clients and developers through Alibaba’s cloud services. Alibaba may release a more accessible version for individual users in the future.

6. Is WAN2.1’s content 100% original?

Yes, WAN2.1 generates unique videos based on user inputs, ensuring originality. However, users should ensure that their inputs (text, images, etc.) do not infringe on copyright or intellectual property laws.
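For readers who want to try the local route mentioned in question 5, the sketch below shows roughly what a text-to-video run might look like once the open-source code and model weights are on your machine. Treat every specific here as an assumption to check against the project’s README: the script name `generate.py`, the flags `--task`, `--size`, `--ckpt_dir`, and `--prompt`, and the default values are written from memory of the public repository, and `build_generate_command` is a hypothetical helper made up for this post. The code only builds and prints the command; it does not run the model.

```python
import shlex


def build_generate_command(prompt, task="t2v-1.3B", size="832*480",
                           ckpt_dir="./Wan2.1-T2V-1.3B"):
    """Return the argv list for a text-to-video run.

    Script name, flag names, and defaults are assumptions;
    verify them against the official README before use.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return [
        "python", "generate.py",
        "--task", task,          # which model variant to use (assumed flag)
        "--size", size,          # output resolution (assumed flag)
        "--ckpt_dir", ckpt_dir,  # folder holding the downloaded weights
        "--prompt", prompt,
    ]


command = build_generate_command(
    "A red car driving along a coastal road at sunset"
)
# Print a shell-safe version of the command for copy and paste.
print(shlex.join(command))
```

Building the command as a list rather than one long string keeps prompts with quotes or spaces intact if you later pass it to `subprocess.run`.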

Conclusion: The Future of Video Creation is Here

Alibaba’s WAN2.1 is not just another tool; it is a glimpse into the future of video production. With its strong output quality, flexible inputs, and broad range of applications, it is poised to change how many industries create and watch video.

Are you future-ready? Discover WAN2.1 today and see how it can transform your creative process. Share your thoughts below or swing by and chat with us on social media to join the conversation. The AI era of video making is here—don’t get left behind!